VoxCeleb

VoxCeleb is a large-scale audio-visual speech dataset built from YouTube interview clips, widely used to train and benchmark deep speaker recognition models for speaker verification, speaker identification, and robust “in-the-wild” voice AI.

Files: 1,000,000

Size: 133 MB

Format: MP4

Duration: 2,000+ hours

Country:

Participants: 7,000

Languages:

Updated: December 23, 2025

Description

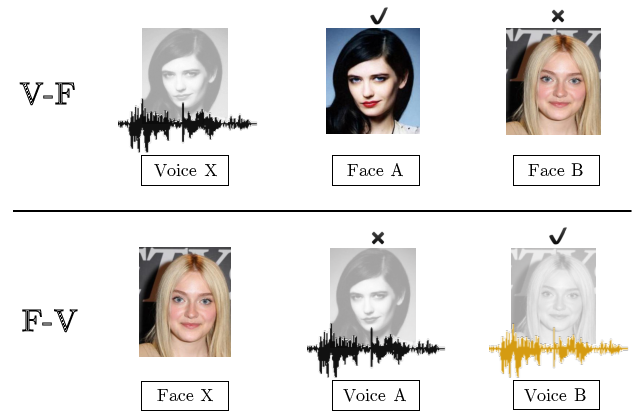

VoxCeleb is a multimodal dataset of short talking-face speech clips (each ≥ 3 seconds) curated from interview videos, designed for text-independent speaker recognition under realistic conditions like background noise, overlapping speech, laughter, pose variation, and changing lighting. It contains 7,000+ speakers, 1M+ utterances, and 2,000+ hours of audio-video data, making it a go-to benchmark for robustness testing, representation learning, and production-grade speaker embedding evaluation.

2,000+ hours. Each file ≥ 3 seconds.
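As a rough sanity check on the headline figures, the short Python sketch below estimates the mean clip length and the number of utterances per speaker. It assumes the listed values are exact (2,000 hours, 1,000,000 utterances, 7,000 speakers), although the page states them as lower bounds, so the results are only approximate and are not official statistics. The constant names are illustrative.

```python
# Back-of-the-envelope check using the headline figures from this page.
# Assumption: the "2,000+", "1M+" and "7,000+" figures are treated as exact,
# so the outputs are rough estimates rather than official statistics.
TOTAL_HOURS = 2_000
NUM_UTTERANCES = 1_000_000
NUM_SPEAKERS = 7_000
MIN_CLIP_SECONDS = 3  # each clip is stated to be at least 3 seconds long

# Mean clip length in seconds: total duration divided by number of clips.
mean_clip_seconds = TOTAL_HOURS * 3600 / NUM_UTTERANCES

# Average number of utterances available per speaker.
utterances_per_speaker = NUM_UTTERANCES / NUM_SPEAKERS

print(f"Approx. mean clip length: {mean_clip_seconds:.1f} s")
print(f"Approx. utterances per speaker: {utterances_per_speaker:.0f}")

# The estimated mean should not fall below the stated minimum clip length.
assert mean_clip_seconds >= MIN_CLIP_SECONDS
```

Under these assumptions the estimate works out to roughly 7 seconds per clip and about 140 utterances per speaker, which is consistent with the stated 3-second minimum.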

Dataset Technical Specification

Number of files: 1,000,000

Total dataset size: 133 MB

Duration: 2,000+ hours

Format: MP4

Sample rate:

Resolution:

Dataset Demographics

📍 Country:

🧍 Gender: Male: 61%; Female: 39%

📅 Age:

👥 Number of participants: 7,000